Oracle provides via OTN an import solution for FBA (Flashback Data Archive also known as Total Recall). The solution extends the SCN to TIMESTAMP mapping plus provides a wrapper to existing APIs to populate the history. However, issues like using a customized mapping period/precision or ORA-1466 when using the AS OF TIMESTAMP clause are not addressed. I show in this post how to load historical data into flashback archive enabled tables using the standard API. Unfortunately there are still some experimental actions necessary to get a fully functional result, at least with version 11.2.0.3.4.

Test Scenario

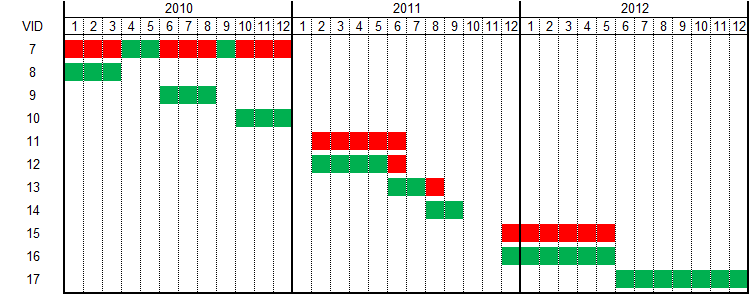

There are various reasons why you may want to load historical data into a FBA enable table. E.g. for testing purposes, to move FBA enabled tables from one database instance to another or to migrate conventionally historized tables. This example is based on a migration scenario. Table T1 has the following content and shall be migrated to FBA. You find the script to create and populate the table T1 here.

SQL> SELECT * FROM t1;

VID OID CREATED_AT OUTDATED_AT C1 C2

--- --- ------------------- ------------------- -- --

1 1 2012-12-19 13:00:57 2012-12-21 08:31:01 A A1

2 1 2012-12-21 08:31:01 2012-12-23 20:58:05 A A2

3 1 2012-12-23 20:58:05 2012-12-27 11:40:41 A A3

4 1 2012-12-27 11:40:41 9999-12-31 23:59:59 A A4

5 2 2012-12-20 13:51:55 9999-12-31 23:59:59 B B1

6 4 2012-12-22 11:03:22 2012-12-23 19:36:08 C C1

7 4 2012-12-28 14:25:50 2012-12-30 17:10:39 C C1

8 4 2012-12-30 17:10:39 2012-12-31 12:05:40 C C2

The column VID is the version identifier and the primary key. OID is the object identifier, which is unique at every point in time. CREATED_AT and OUTDATED_AT define the interval boundaries. Column C1 and C2 are the payload columns, which may change over time.

The following queries return data valid at 2012-12-23 19:36:08 and now.

SQL> SELECT * FROM t1

2 WHERE created_at <= TIMESTAMP '2012-12-23 19:36:08'

3 AND outdated_at > TIMESTAMP '2012-12-23 19:36:08';

VID OID CREATED_AT OUTDATED_AT C1 C2

--- --- ------------------- ------------------- -- --

2 1 2012-12-21 08:31:01 2012-12-23 20:58:05 A A2

5 2 2012-12-20 13:51:55 9999-12-31 23:59:59 B B1

SQL> SELECT * FROM t1 WHERE outdated_at > SYSDATE;

VID OID CREATED_AT OUTDATED_AT C1 C2

--- --- ------------------- ------------------- -- --

4 1 2012-12-27 11:40:41 9999-12-31 23:59:59 A A4

5 2 2012-12-20 13:51:55 9999-12-31 23:59:59 B B1

It’s important to notice that OID 4 (see highlighted line above) is not part of the first query result, since OUTDATED_AT has an excluding semantic. I mention this fact because the column ENDSCN in the table SYS_FBA_HIST_<object_id> uses also an excluding semantic, which simplifies the migration process, at least in this area.

Oracle uses its own time standard SCN for FBA. The SCN is initialized during database creation and is valid for a single Oracle instance, even if synchronization mechanisms among database instances exist (see also MOS note 1376995.1). A SCN may not represent a date-time value before 1988-01-01 00:00:00. The time spent between two SCNs is varying, it may be shorter when the database instance is executing a lot of transactions and it may be longer in more idle times or when the database instance is shut down. Oracle uses the table SYS.SMON_SCN_TIME to map SCN to TIMESTAMPs and vice versa and provides the functions SCN_TO_TIMESTAMP and TIMESTAMP_TO_SCN for that purpose.

So what is the first date-time value which may be converted into a SCN?

SQL> SELECT MIN(time_dp) AS min_time_dp,

2 MIN(scn) AS min_scn,

3 CAST(scn_to_timestamp(MIN(scn)) AS DATE) AS min_scn_to_ts,

4 MAX(dbtimezone) AS dbtimezone,

5 sessiontimezone

6 FROM sys.smon_scn_time;

MIN_TIME_DP MIN_SCN MIN_SCN_TO_TS DBTIMEZONE SESSIONTIMEZONE

------------------- ---------- ------------------- ---------- ---------------

2010-09-05 22:40:05 18669 2010-09-06 00:40:05 +00:00 +01:00

The first value is 2010-09-06 00:40:05. You may notice the two hour difference to MIN_TIME_DP. Oracle stores the date values in this table in UTC (DBTIMEZONE) and my database server’s time zone is CET (Central European Time) which is UTC+01:00 at the point of query (see SESSIONTIMEZONE). However, in September daylight saving time was active and back then CET was UTC+02:00. That explains the two hour difference.

Let’s test the boundary values.

SQL> SELECT timestamp_to_scn(TIMESTAMP '2010-09-06 00:40:05') AS SCN FROM dual;

SCN

----------

18669

SQL> SELECT timestamp_to_scn(TIMESTAMP '2010-09-06 00:40:04') AS SCN FROM dual;

SELECT timestamp_to_scn(TIMESTAMP '2010-09-06 00:40:04') AS SCN FROM dual

*

ERROR at line 1:

ORA-08180: no snapshot found based on specified time

ORA-06512: at "SYS.TIMESTAMP_TO_SCN", line 1As expected 2010-09-06 00:40:05 works and 2010-09-06 00:40:04 raises an ORA-8180 error.

I expect that you get complete different values in your database, since the SMON process deletes “old” values in SYS.SMON_SCN_TIME based on UNDO and FBA configuration. Since this table is solely used for timestamp to SCN conversion I assume it is save to extend it manually. In fact the PL/SQL package DBMS_FDA_MAPPINGS provided by Oracle is exactly doing that, but distributes the remaining SCNs between 1988-01-01 and the MIN(time_dp) in SYS.SMON_SCN_TIME uniformly. In my case there are only 18868 SCNs remaining to be assigned to timestamps before 2010-09-06 00:40:05. So if I know that I won’t need timestamps to be mapped to SCN let’s say before 2010-01-01 I may use the remaining values to improve the precision of timestamp to SCN mappings for this reduced period.

Im my case I do not need to extend the mapping in SYS.SMON_SCN_TIME, but here is an example how it can be done:

SQL> INSERT INTO smon_scn_time (

2 thread,

3 orig_thread,

4 time_mp,

5 time_dp,

6 scn_wrp,

7 scn_bas,

8 scn,

9 num_mappings

10 )

11 SELECT 0 AS thread,

12 0 AS orig_thread,

13 (

14 CAST(

15 to_timestamp_tz(

16 '2010-09-06 00:40:04 CET',

17 'YYYY-MM-DD HH24:MI:SS TZR'

18 ) AT TIME ZONE 'UTC' AS DATE

19 ) - DATE '1970-01-01'

20 ) * 60 * 60 * 24 AS time_mp,

21 CAST(

22 to_timestamp_tz(

23 '2010-09-06 00:40:04 CET',

24 'YYYY-MM-DD HH24:MI:SS TZR'

25 ) at TIME ZONE 'UTC' AS DATE

26 ) AS time_dp,

27 FLOOR((MIN(scn) - 1) / POWER(2, 32)) AS scn_wrp,

28 MOD(MIN(scn) - 1, POWER(2, 32)) AS scn_bas,

29 MIN(scn) - 1 AS scn,

30 0 AS num_mappings

31 FROM sys.smon_scn_time;

1 row created.

SQL> SELECT timestamp_to_scn(TIMESTAMP '2010-09-06 00:40:04') AS scn FROM dual;

SCN

----------

18668TIME_MP is the number of seconds since 1970-01-01. TIME_DP is the date value of TIME_MP. The calculation of these columns includes time zone conversion from CET to UTC which makes it a bit verbose. SCN_WRAP counts the number of times SCN_BASE has reached its 32-bit value maximum of 4294967295.

Alternatively you may simply extend the SYS.SMON_SCN_TIME based on SYS.V$LOG_HISTORY.

INSERT INTO smon_scn_time

(thread,

orig_thread,

time_mp,

time_dp,

scn_wrp,

scn_bas,

scn,

num_mappings)

SELECT 0 AS thread,

0 AS orig_thread,

(first_time - DATE '1970-01-01') * 60 * 60 * 24 AS time_mp,

first_time AS time_dp,

floor(first_change# / power(2, 32)) AS scn_wrp,

MOD(first_change#, power(2, 32)) AS scn_bas,

first_change# AS scn,

0 AS num_mappings

FROM v$log_history

WHERE first_time < (SELECT MIN(time_dp)

FROM smon_scn_time);BTW: The TIMESTAMP_TO_SCN function uses an own kind of result cache. You may need to restart the database if you undo changes in SYS.SMON_SCN_TIME.

Next, we should check if the mapping from timestamp to SCN is precise enough, means a SCN should not be used by multiple timestamps. Here’s the query for T1:

SQL> SELECT COUNT(DISTINCT ts) AS cnt_ts,

2 COUNT(DISTINCT timestamp_to_scn(ts)) AS cnt_ts_to_scn

3 FROM (SELECT created_at ts FROM t1

4 UNION

5 SELECT outdated_at FROM t1

6 MINUS

7 SELECT TIMESTAMP '9999-12-31 23:59:59' FROM dual);

CNT_TS CNT_TS_TO_SCN

---------- -------------

10 10T1 is ready to be migrated to a FBA enabled table if CNT_TS and CNT_TS_TO_SCN are equal. If the values are different you have basically two options. a) amend T1 (e.g. change timestamps or merge intervals) or b) amend SYS.SMON_SCN_TIME as explained above.

Migration

The following script creates a flashback archive and the FBA enabled table T2. At the end a small PL/SQL block is executed to create views for the 3 SYS_FBA_…_<object_id> tables.

-- create FBA

CREATE FLASHBACK ARCHIVE fba TABLESPACE USERS QUOTA 10M RETENTION 10 YEAR;

-- create FBA enabled table t2

CREATE TABLE t2 (

oid NUMBER(4,0) NOT NULL PRIMARY KEY,

c1 VARCHAR2(10) NOT NULL,

c2 VARCHAR2(10) NOT NULL

) FLASHBACK ARCHIVE fba;

-- enforce visibility of SYS_FBA tables

BEGIN

dbms_flashback_archive.disassociate_fba(owner_name => USER, table_name => 'T2');

dbms_flashback_archive.reassociate_fba(owner_name => USER, table_name => 'T2');

END;

/

-- create views on SYS_FBA tables

DECLARE

PROCEDURE create_view(in_view_name IN VARCHAR2,

in_table_name IN VARCHAR2) IS

BEGIN

EXECUTE IMMEDIATE 'CREATE OR REPLACE VIEW ' || in_view_name ||

' AS SELECT * FROM ' || in_table_name;

END create_view;

BEGIN

FOR l_rec IN (SELECT object_id

FROM user_objects

WHERE object_name = 'T2')

LOOP

create_view('T2_DDL_COLMAP','SYS_FBA_DDL_COLMAP_' || l_rec.object_id);

create_view('T2_HIST', 'SYS_FBA_HIST_' || l_rec.object_id);

create_view('T2_TCRV', 'SYS_FBA_TCRV_' || l_rec.object_id);

END LOOP;

END;

/Flashback Query (since Oracle9i) and Flashback Data Archive (since Oracle 11g) are tightly coupled. The SYS_FBA_…<object_id> tables are created delayed by the FBDA background process. DML and querying table T2 is possible anyway with the help of UNDO and Flashback Query. The highlighted lines show the PL/SQL block to enforce the creation of the SYS_FBA…<object_id> tables. This step is necessary to create the views T2_DDL_COLMAP, T2_HIST and T2_TCRV. These views simplify the access to the underlying tables in my SQL scripts.

The next script copies data from table T1 to T2. Basically that’s what the PL/SQL package DBMS_FDA_IMPORT provided by Oracle does.

-- migrate current rows

INSERT INTO t2 (OID, c1, c2)

SELECT OID, c1, c2

FROM t1

WHERE outdated_at > SYSDATE;

COMMIT;

-- enable DML on FBA tables

BEGIN

dbms_flashback_archive.disassociate_fba(owner_name => USER, table_name => 'T2');

END;

/

-- migrate T1 rows into T2

INSERT INTO t2_hist (RID, STARTSCN, ENDSCN, XID, OPERATION, OID, C1, C2)

-- outdated INSERTs (simulating INSERT/DELETE logic)

SELECT NULL AS rid,

timestamp_to_scn(created_at) AS startscn,

timestamp_to_scn(outdated_at) AS endscn,

NULL AS XID,

'I' AS operation,

OID,

c1,

c2

FROM t1

WHERE outdated_at < SYSDATE

-- current INSERTs (workaround for ORA-55622 on insert into T2_TRCV)

UNION ALL

SELECT t2.rowid AS rid,

timestamp_to_scn(t1.created_at) AS startscn,

h.startscn AS endscn,

NULL AS XID,

'I' AS operation,

t2.OID,

t2.c1,

t2.c2

FROM t1 t1

INNER JOIN t2

ON t2.oid = t1.oid

INNER JOIN t2_tcrv h

ON h.RID = t2.rowid

WHERE t1.outdated_at > SYSDATE;

COMMIT;

-- disable DML on FBA tables

BEGIN

dbms_flashback_archive.reassociate_fba(owner_name => USER, table_name => 't2');

END;

/The following queries return data valid at 2012-12-23 19:36:08 and now (as for T1 above).

SQL> SELECT * FROM t2 AS OF SCN timestamp_to_scn(TIMESTAMP '2012-12-23 19:36:08');

OID C1 C2

--- -- --

1 A A2

2 B B1

SQL> SELECT * FROM t2;

OID C1 C2

--- -- --

1 A A4

2 B B1

As you see the results are identical with the ones of T1. The script here compares the result for every single point in time in T1 with T2. I’ve run it without detecting differences.

Migration Issue 1 – ORA-1466 When Using AS OF TIMESTAMP Clause

In the examples above I avoided the use of the AS OF TIMESTAMP clause and used the AS OF SCN clause instead. The reason becomes apparent when executing the following query:

SQL> SELECT * FROM t2 AS OF TIMESTAMP TIMESTAMP '2012-12-23 19:36:08';

SELECT * FROM t2 AS OF TIMESTAMP TIMESTAMP '2012-12-23 19:36:08'

*

ERROR at line 1:

ORA-01466: unable to read data - table definition has changedOk, we have not changed the table definition, but was the table definition for T2 valid on 2012-12-23 19:36:08?

SQL> SELECT column_name,

2 startscn,

3 endscn,

4 CAST(scn_to_timestamp(startscn) AS DATE) AS start_ts

5 FROM t2_ddl_colmap;

COLUMN_NAME STARTSCN ENDSCN START_TS

----------- -------------- ---------- -------------------

OID 161382016632 2013-01-03 00:17:42

C1 161382016632 2013-01-03 00:17:42

C2 161382016632 2013-01-03 00:17:42

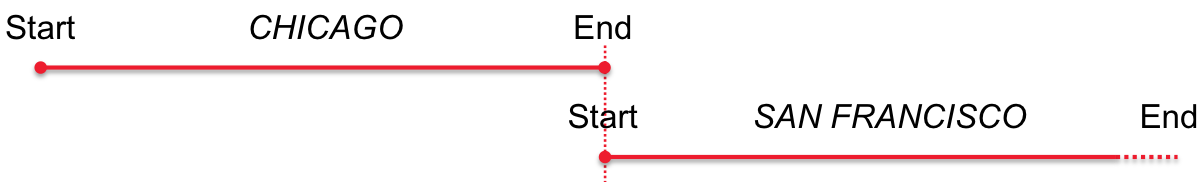

Since I’ve created the table on 2013-01-03 00:17:42 Oracle assumes that no columns exist before this point in time. However, the AS OF SCN clause is not that picky.

To fix the problem we need to update T2_DDL_COLMAP but unfortunately DBMS_FLASHBACK_ARCHIVE.DISASSOCIATE_FBA does not allow us to change the content of the DDL_COLMAP directly (it is possible indirectly by altering the HIST table, but this is not helpful in this case).

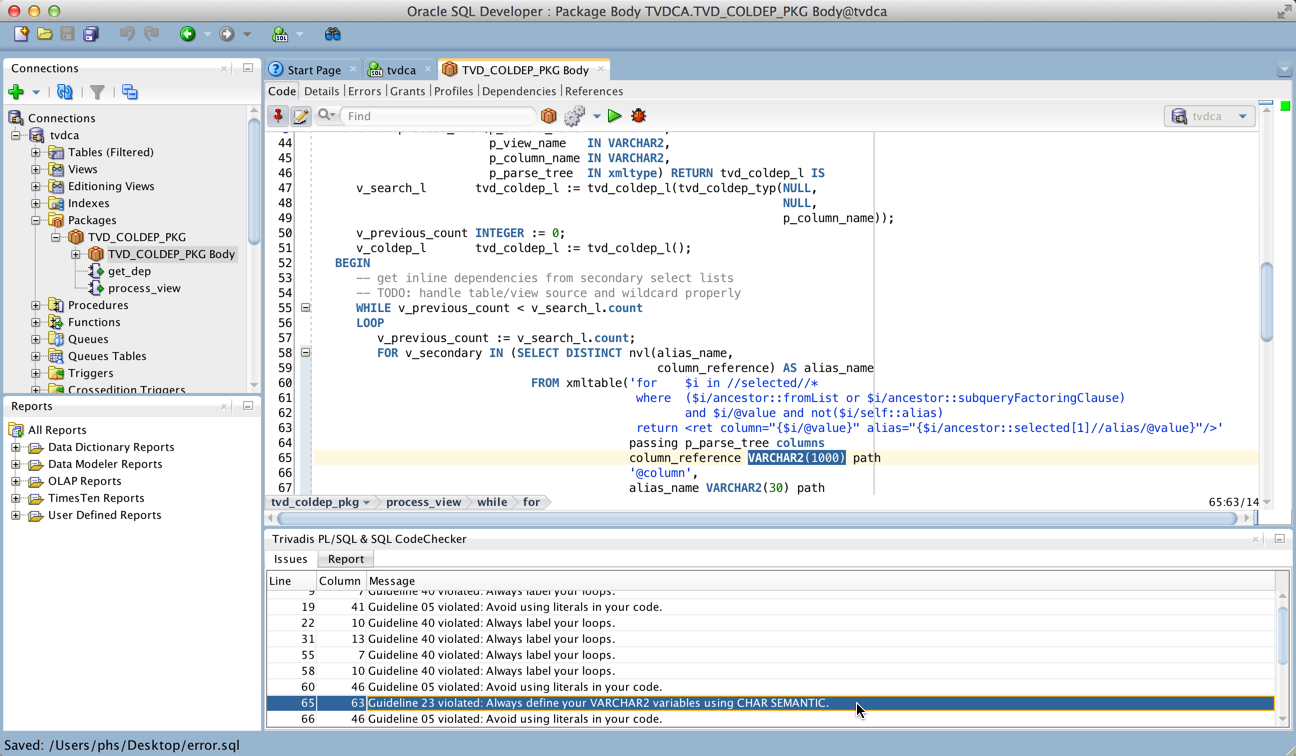

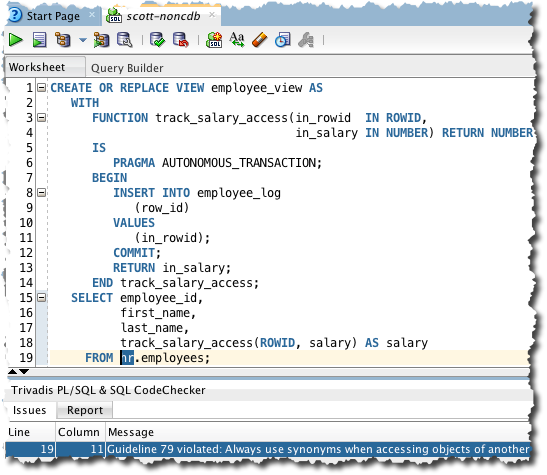

I’ve written a PL/SQL package TVD_FBA_HELPER which updates SYS.TAB$ behind the scenes to overcome this restriction. Please consult Oracle Support how to proceed if you plan to use it in productive environments. The package is provided as is and of course you are using it at your own risk.

Here is the script to fix validity of the DDL_COLMAP:

-- enable DML FBA table

BEGIN

tvd_fba_helper.disassociate_col_map(in_owner_name => USER, in_table_name => 'T2');

END;

/

-- enforce ddl colmap consistentcy (valid for first entries)

UPDATE t2_ddl_colmap

SET startscn =

(SELECT MIN(startscn)

FROM t2_hist);

COMMIT;

-- disable DML an FBA tables

BEGIN

tvd_fba_helper.reassociate_col_map(in_owner_name => USER, in_table_name => 'T2');

END;

/Now the AS OF TIMESTAMP clause works as well:

SQL> SELECT * FROM t2 AS OF TIMESTAMP TIMESTAMP '2012-12-23 19:36:08';

OID C1 C2

--- -- --

1 A A2

2 B B1

Migration Issue 2 – Different Number of Intervals

If you query the full history of T2 you get 10 rows, but T1 contains 8 rows only.

SQL> SELECT ROWNUM AS vid, OID, created_at, outdated_at, c1, c2

2 FROM (SELECT OID,

3 TO_DATE(TO_CHAR(versions_starttime, 'YYYY-MM-DD HH24:MI:SS'),

4 'YYYY-MM-DD HH24:MI:SS') AS created_at,

5 NVL(TO_DATE(TO_CHAR(versions_endtime, 'YYYY-MM-DD HH24:MI:SS'),

6 'YYYY-MM-DD HH24:MI:SS'),

7 TIMESTAMP '9999-12-31 23:59:59') AS outdated_at,

8 c1,

9 c2

10 FROM t2 VERSIONS BETWEEN TIMESTAMP TIMESTAMP '2012-12-19 13:00:57'

11 AND SYSTIMESTAMP

12 ORDER BY 1, 2);

VID OID CREATED_AT OUTDATED_AT C1 C2

--- --- ------------------- ------------------- -- --

1 1 2012-12-19 13:00:53 2012-12-21 08:20:19 A A1

2 1 2012-12-21 08:20:19 2012-12-23 20:58:03 A A2

3 1 2012-12-23 20:58:03 2012-12-27 11:40:40 A A3

4 1 2012-12-27 11:40:40 2013-01-03 00:50:19 A A4

5 1 2013-01-03 00:50:19 9999-12-31 23:59:59 A A4

6 2 2012-12-20 13:51:54 2013-01-03 00:50:19 B B1

7 2 2013-01-03 00:50:19 9999-12-31 23:59:59 B B1

8 4 2012-12-22 10:26:35 2012-12-23 19:36:07 C C1

9 4 2012-12-28 14:25:50 2012-12-30 17:10:38 C C1

10 4 2012-12-30 17:10:38 2012-12-31 12:05:39 C C2

The highlighted rows for OID 1 and 2 could be merged. The content is not really wrong it’s just different to T1. The reason is, that DBMS_FLASHBACK_ARCHIVE.DISASSOCIATE_FBA does not allow us to modify T2_TCRV (SYS_FBA_TCRV…<object_id> table). This table contains validity information for the current rows in T2.

SQL> SELECT rid,

2 startscn,

3 CAST(scn_to_timestamp(startscn) AS DATE) AS start_ts,

4 endscn,

5 op

6 FROM t2_tcrv;

RID STARTSCN START_TS ENDSCN O

------------------ -------------- ------------------- ---------- -

AAAZ1PAAIAAAAb0AAA 161382018095 2013-01-03 00:50:19 I

AAAZ1PAAIAAAAb0AAB 161382018095 2013-01-03 00:50:19 I

That’s why I had to inserted the actual rows also in T2_HIST.

But with the help of the PL/SQL package TVD_FBA_HELPER the data may be fixed as follows:

-- enable DML on FBA tables

BEGIN

dbms_flashback_archive.disassociate_fba(owner_name => USER, table_name => 'T2');

tvd_fba_helper.disassociate_tcrv(in_owner_name => USER, in_table_name => 'T2');

END;

/

-- extend begin of validity in TCRV table and fix HIST table accordingly

MERGE INTO t2_tcrv t

USING (SELECT rid, startscn FROM t2_hist b) s

ON (s.rid = t.rid)

WHEN MATCHED THEN

UPDATE SET t.startscn = s.startscn;

DELETE FROM t2_hist WHERE rid IN (SELECT rid FROM t2_tcrv);

COMMIT;

-- disable DML an FBA tables

BEGIN

tvd_fba_helper.reassociate_tcrv(in_owner_name => USER, in_table_name => 'T2');

dbms_flashback_archive.reassociate_fba(owner_name => USER, table_name => 'T2');

END;

/Now the query returns 8 rows:

SQL> SELECT ROWNUM AS vid, OID, created_at, outdated_at, c1, c2

2 FROM (SELECT OID,

3 TO_DATE(TO_CHAR(versions_starttime, 'YYYY-MM-DD HH24:MI:SS'),

4 'YYYY-MM-DD HH24:MI:SS') AS created_at,

5 NVL(TO_DATE(TO_CHAR(versions_endtime, 'YYYY-MM-DD HH24:MI:SS'),

6 'YYYY-MM-DD HH24:MI:SS'),

7 TIMESTAMP '9999-12-31 23:59:59') AS outdated_at,

8 c1,

9 c2

10 FROM t2 VERSIONS BETWEEN TIMESTAMP TIMESTAMP '2012-12-19 13:00:57'

11 AND SYSTIMESTAMP

12 ORDER BY 1, 2);

VID OID CREATED_AT OUTDATED_AT C1 C2

--- --- ------------------- ------------------- -- --

1 1 2012-12-19 13:00:53 2012-12-21 08:20:19 A A1

2 1 2012-12-21 08:20:19 2012-12-23 20:58:03 A A2

3 1 2012-12-23 20:58:03 2012-12-27 11:40:40 A A3

4 1 2012-12-27 11:40:40 9999-12-31 23:59:59 A A4

5 2 2012-12-20 13:51:54 9999-12-31 23:59:59 B B1

6 4 2012-12-22 10:26:35 2012-12-23 19:36:07 C C1

7 4 2012-12-28 14:25:50 2012-12-30 17:10:38 C C1

8 4 2012-12-30 17:10:38 2012-12-31 12:05:39 C C2

The timestamps are slightly different to the ones in T1, but that’s expected behavior since the precision of TIMESTAMP_TO_SCN conversion is limited to around 3 seconds.

Conclusion

Loading historical data into FBA enable tables requires a strategy to populate historical mappings in SYS.SMON_SCN_TIME. Afterwards you may load the associated SYS_FBA_HIST_<object_id> table with the help of the Oracle supplied PL/SQL package DBMS_FLASHBACK_ARCHIVE and its procedures DISASSOCIATE_FBA and REASSOCIATE_FBA. I recommend this approach for 11gR2 Database environments.

The solutions to fix migration issue 1 (ORA-1466 when using AS OF TIMESTAMP clause) and migration issue 2 (different number of intervals) are considered experimental. I suggest to contact Oracle Support to discuss how to proceed if you need a solution in this area.

Updated on 10-APR-2014, changed calculation of SCN_WRP and SCN_BAS in Extend SMON_SCN_TIME (1) and Extend SMON_SCN_TIME (2), changed link to new version of TVD_FBA_HELPER.